An ELI5 guide to RAG, a framework that helps large language models to generate more accurate and up-to-date answers

In the context of large language models, generation refers to the part of the model that produces text in response to a user query, which is called a prompt. This is how ChatGPT works: you ask a question, and it generates an answer for you. But like everything in AI/ML, these generated answers are not 100% accurate.

In this blog we discuss the two challenges a large language model (LLM) is prone to and how RAG can help us overcome these issues.

Large Language Models: Interaction Challenges

Two behaviors are considered problematic while interacting with LLMs:

- Out-of-date information

- No source while answering a particular query

Large language models are everywhere – they get some things amazingly right and other things very interestingly wrong!

Let’s try to understand why this is a problem using a cosmic example.

There has always been intense debate about whether Jupiter or Saturn has the highest number of moons. If you had asked me a while back, I would have said Jupiter, since I once read an article about new moons being discovered for the biggest planet of our solar system. But Saturn regained its ‘planet in the solar system with the most moons’ crown just months after being overtaken by its fellow gas giant. The leapfrog came after the discovery of 62 new moons of Saturn, bringing the official total to a whopping 145. Since I had not yet heard of this discovery when I was asked, my answer was not up to date, i.e. problem number one.

Asking someone who doesn’t have a special interest in astronomy the same question would probably give you the same answer. That’s because it is their ‘best guess’, or perhaps an incorrect assumption that because Jupiter is the biggest planet it probably has the highest number of moons too. This answer has no source behind it; it is a confident guess, much like an LLM hallucination, i.e. problem number two.

Introducing Retrieval-Augmented Generation

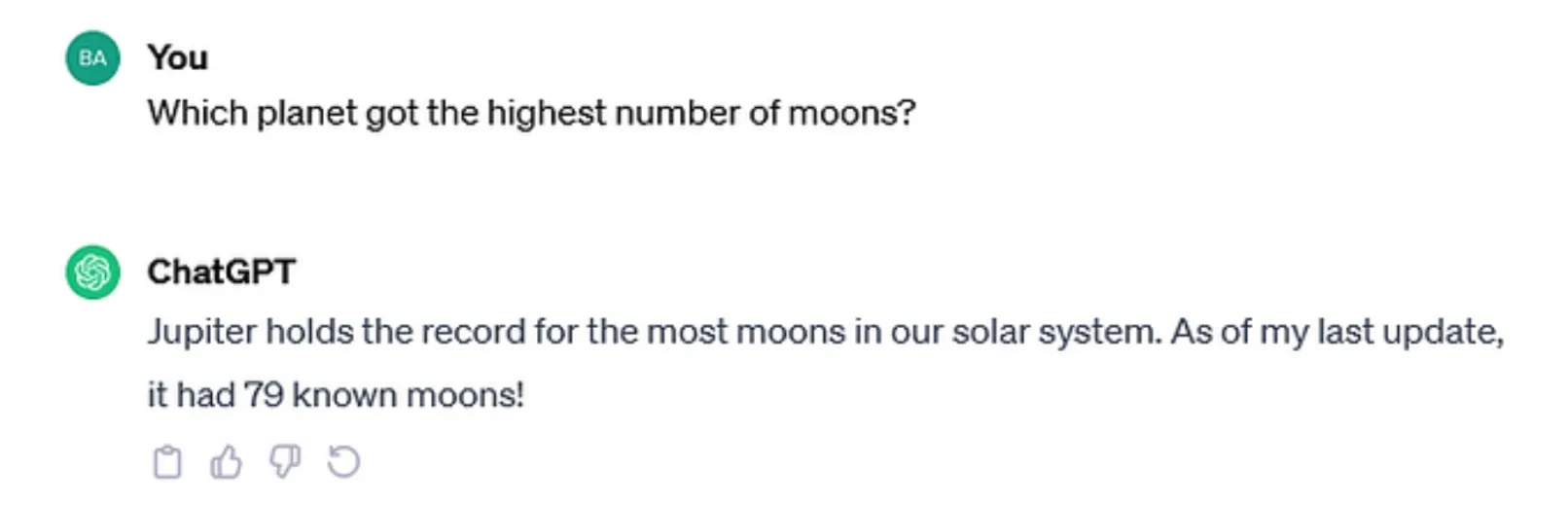

Let’s ask ChatGPT the same “how many moons” question.

As you can see, it also mentions that this information is only valid as of its last update. For the model to answer this question correctly from its own knowledge, we would need to retrain it, which is not always feasible. Retraining again and again just to keep an LLM current would also be very challenging and uneconomical.

That’s where Retrieval-Augmented Generation (RAG) comes in, a strategy that helps address both LLM hallucinations and out-of-date training data. Pairing an LLM with this architecture boosts its capabilities without spending time and money on additional training.

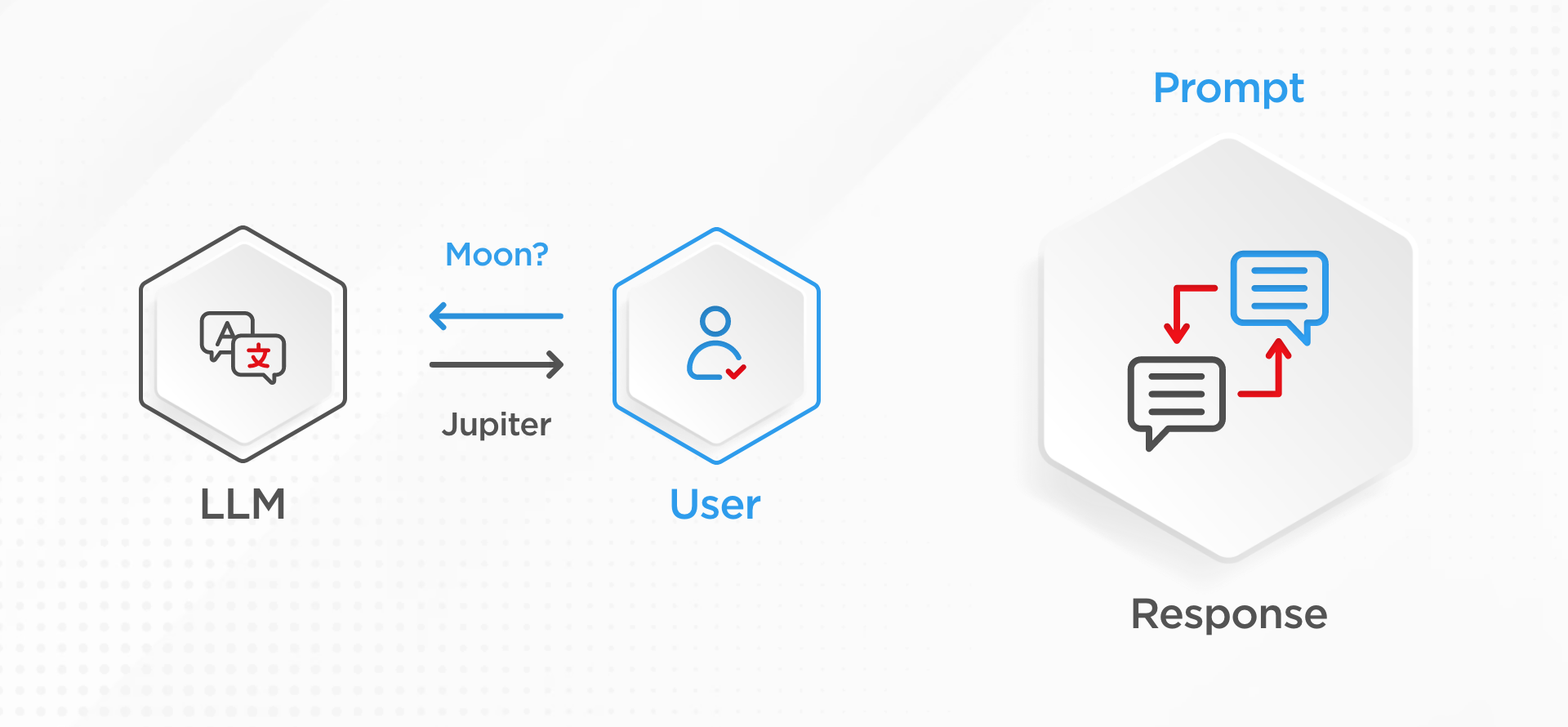

The way a large language model works on its own is that the user asks a question about moons, also known as a prompt, and the LLM answers “Jupiter” because that is all it knows from the parameters it learned during training. The LLM would be quite confident while generating this response, although it would most likely be wrong.

However, when you add retrieval augmentation to this generation process, the model no longer relies only on what it already knows: we give it access to a content store. This content store could be anything: a collection of documents, internal or external databases, or even the open internet. The LLM now receives a set of instructions along with the prompt that says:

- First, look in the content store for information that is relevant to the user’s question, and combine it with that question.

- Only then generate the response, along with the evidence that supports it.
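The two steps above can be sketched in a few lines of Python. This is only a toy illustration, not how any production RAG system is built: the content store is a hard-coded list, the retriever simply counts shared words (real systems typically use search indexes or embeddings), and the names `CONTENT_STORE`, `retrieve`, and `build_prompt` are made up for this example. No LLM is called; the sketch stops at the augmented prompt that would be sent to one.

```python
import re

# Hypothetical content store. In practice this could be a document
# collection, a database, or web search results.
CONTENT_STORE = [
    "Saturn has 145 moons after the discovery of 62 new moons.",
    "Jupiter is the biggest planet of our solar system.",
    "ChatGPT generates text in response to a prompt.",
]


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, store, top_k=1):
    """Step 1: rank passages by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        store,
        key=lambda passage: len(question_words & tokenize(passage)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, passages):
    """Step 2: combine the retrieved passages with the user's question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below and cite the passage you used.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


question = "Which planet has the most moons?"
passages = retrieve(question, CONTENT_STORE)
prompt = build_prompt(question, passages)
print(prompt)
```

The prompt that reaches the model now carries the Saturn passage alongside the question, so the answer can be grounded in retrieved text rather than in whatever the model memorized during training.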

In Summary

I hope you can now see how RAG helps overcome the two challenges we saw earlier:

- Outdated: Now instead of retraining the model, if new information becomes available, like the discovery of moons, all you have to do is augment the data store with the new information. In doing so, the language model can provide an updated and more relevant answer by retrieving the most up-to-date information.

- Source: The large language model is now instructed to pay attention to the primary source data before giving its response. Because it can point to evidence and relies less on the possibly outdated data it was trained on, it is less likely to hallucinate.
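Both points can be seen in a small Python sketch. It is a simplified illustration under stated assumptions: the store is a plain list of (source label, passage) pairs, the labels `planet-notes` and `moon-discovery-report` are invented, and `best_match` is a hypothetical word-overlap retriever rather than a real library call. The only thing that changes between the two lookups is the store; nothing is retrained.

```python
import re


def words(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_match(question, store):
    """Return the (source, passage) pair sharing the most words with the question."""
    question_words = words(question)
    return max(store, key=lambda entry: len(question_words & words(entry[1])))


# Hypothetical store of (source label, passage) pairs.
store = [("planet-notes", "Jupiter is the biggest planet of our solar system.")]
question = "Which planet has the most moons?"

source_before, passage_before = best_match(question, store)

# New information arrives: append it to the store. The model is untouched.
store.append(
    ("moon-discovery-report", "Saturn has 145 moons after the discovery of 62 new moons.")
)

source_after, passage_after = best_match(question, store)

print(f"Before: {passage_before} [{source_before}]")
print(f"After:  {passage_after} [{source_after}]")
```

Keeping the source label next to each passage is what lets the final answer cite where its information came from.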

There is still a lot of research being conducted on how to improve these models from both ends: improving the retriever so that it gives the LLM the best-quality data on which to ground its response, and improving the generator so that the LLM can give the richest and best response to the user.