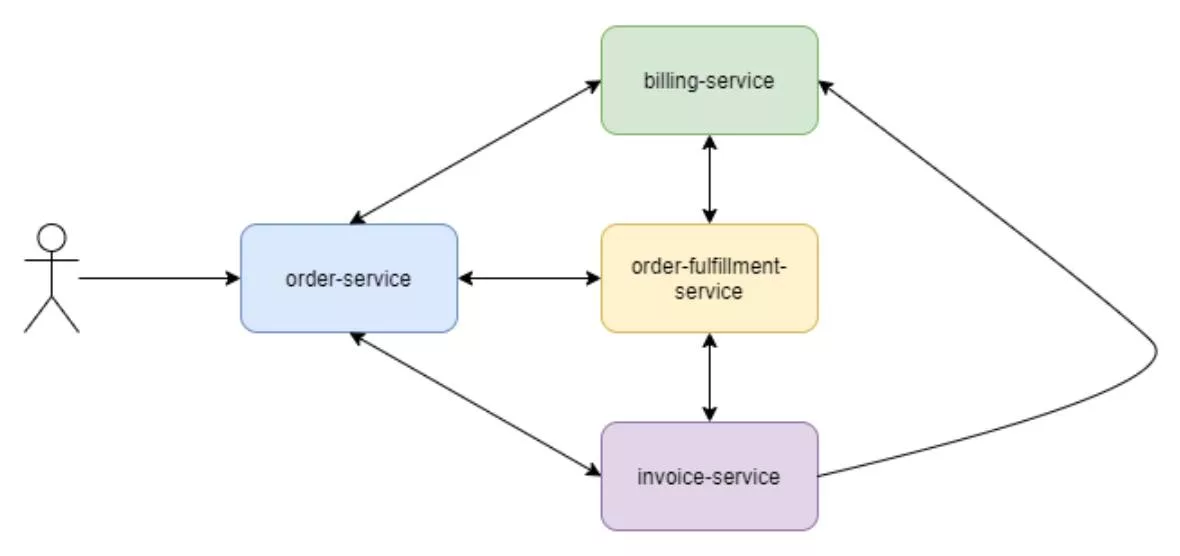

A common pitfall in microservice design is direct communication between several services. Completing a single top-level transaction then requires multiple transactions across multiple services. In essence, the monolith has evolved into a distributed monolith, which combines the worst parts of both paradigms.

For the purposes of this article, a transaction is a request that triggers complex interactions between microservices. For clarity, some diagrams have been simplified and may omit certain implementation details.

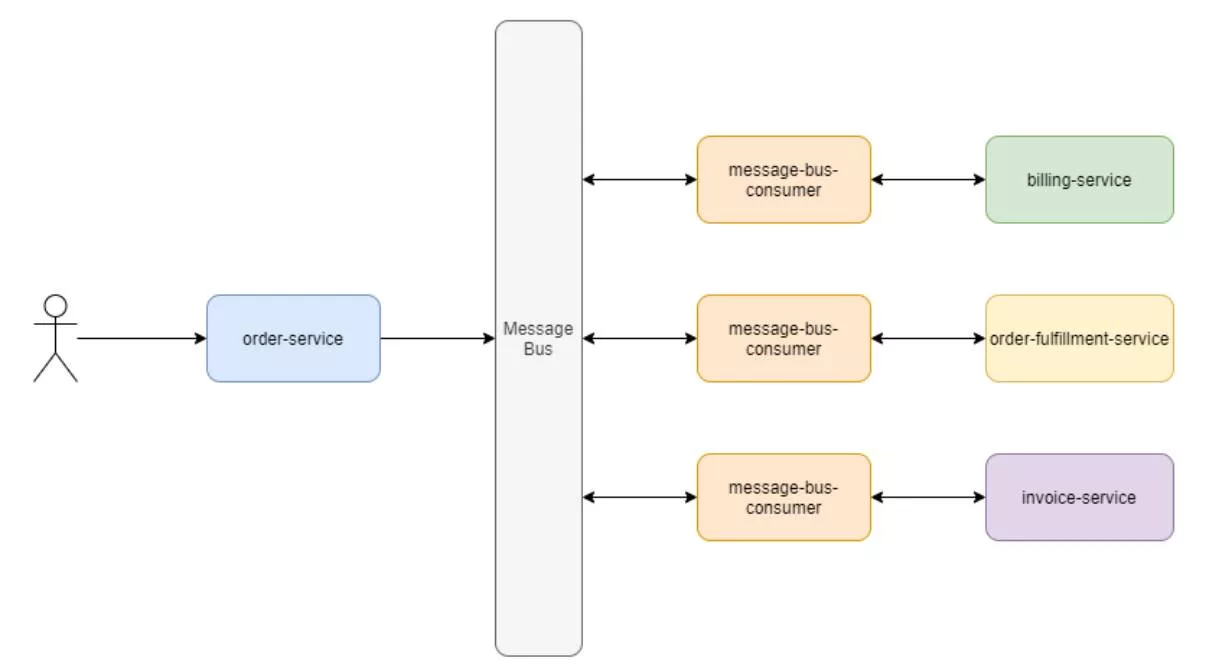

A common strategy for resolving this issue is to utilize a message bus to decouple the services. The advantages of this approach include:

- Asynchronous downstream processing when needed or wherever possible.

- Uninterrupted transaction processing in the event of a service outage.

- Automatic transaction re-queuing and reprocessing following an outage or after a bug fix has been pushed to production.

- New processing steps can be added by adding another consumer to the message bus.

There are a variety of technologies that could be used in the implementation above, such as SQS, SNS, RabbitMQ, Kafka, Redis, ActiveMQ, etc. The consumer implementation will be dependent on the broker used, but it will contain common elements:

- Retry logic for requests to the services.

- The ability to acknowledge (ack) or negatively acknowledge (nack) a message, signaling whether it was received and processed successfully.

- Enriching the data on the bus for any downstream dependencies, e.g., IDs or instances of newly created transactions, transaction metadata, etc.

- Producing new messages to trigger downstream processing.

- Auto-scaling capabilities based on the queue or topic size in the message bus. Just like the auto-scaling capabilities of the services themselves, the consumers and producers can scale.
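The common consumer elements listed above can be sketched in a broker-agnostic way. This is a minimal sketch, not a definitive implementation: `BusMessage`, `Broker`, `ServiceCall`, `callWithRetry`, and `handleMessage` are hypothetical names, and a real broker client (SQS, RabbitMQ, Kafka, etc.) exposes its own API for acknowledging and publishing messages. Retry backoff is omitted for brevity.

```typescript
// Hypothetical message and broker shapes; real brokers differ.
interface BusMessage {
  id: string;
  correlationId: string;
  body: Record<string, unknown>;
}

interface Broker {
  ack(message: BusMessage): void; // processing succeeded
  nack(message: BusMessage): void; // processing failed, message should be re-queued
  publish(topic: string, message: BusMessage): void;
}

type ServiceCall = (
  body: Record<string, unknown>
) => Promise<Record<string, unknown>>;

// Retry the service call up to maxAttempts times before giving up.
async function callWithRetry(
  call: ServiceCall,
  body: Record<string, unknown>,
  maxAttempts: number
): Promise<Record<string, unknown>> {
  let lastError: unknown = new Error('maxAttempts must be at least 1');
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await call(body);
    } catch (error) {
      lastError = error;
    }
  }
  throw lastError;
}

async function handleMessage(
  broker: Broker,
  message: BusMessage,
  call: ServiceCall,
  downstreamTopic: string,
  maxAttempts = 3
): Promise<void> {
  try {
    const result = await callWithRetry(call, message.body, maxAttempts);
    // Enrich the payload with data that downstream consumers depend on.
    const enriched: BusMessage = {
      ...message,
      body: { ...message.body, ...result },
    };
    // Produce a new message to trigger downstream processing, then ack.
    broker.publish(downstreamTopic, enriched);
    broker.ack(message);
  } catch (error) {
    // All attempts failed: hand the message back to the broker.
    broker.nack(message);
  }
}
```

Because the message is only acknowledged after the downstream message has been published, a crash between the two steps leads to redelivery rather than a lost transaction, so downstream consumers should tolerate duplicates.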

Solving the Modelling Problem with BPMN

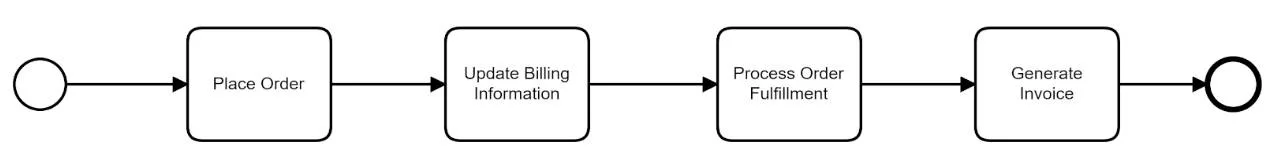

Engineers often use finite state machines (FSMs) to represent state, backend processes, etc. While several notation standards exist for representing FSMs, we often fall back on basic flow charts. Business Process Model and Notation (BPMN) offers a standard for creating FSMs that both engineering teams and business stakeholders can understand. The beauty of BPMN is that engineers and business stakeholders speak the same language when discussing a business process, which eliminates the kind of “translation” errors we see when modeling the domain (Business Process Model and Notation (BPMN), Version 2.0).

For example, the BPMN diagram below represents the process for creating an order; the steps are clear and can be easily understood by both the business stakeholders and engineers:

These diagrams are not limited to communication. The diagram above can be published to a BPMN engine, which will then invoke the steps. This allows us to orchestrate the work with the same diagram that we used to model the process with the business.

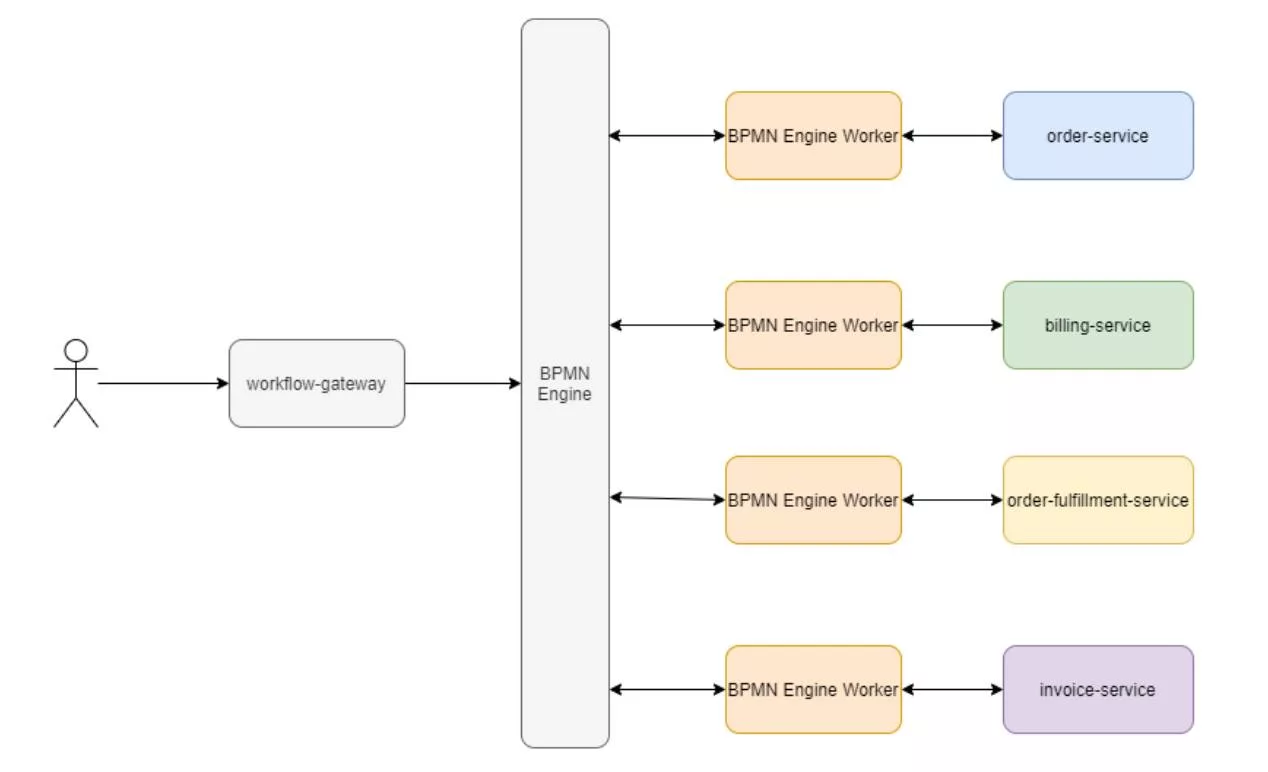

There are several ways that a BPMN engine can be integrated into a system to perform microservice orchestration. However, engines do not all offer the same capabilities, and the available features vary. For example, Activiti focuses on providing core engine capabilities and relies on external infrastructure to perform tasks such as I/O. Other engines are offered as platforms, such as Camunda, which contains a core BPMN and rules engine but also ships with additional infrastructure and capabilities.

Therefore, the examples below will vary depending on which engine is used. [NB. Camunda is a fork of Activiti, so there is an overlap between those two particular engines.] In these examples, the BPMN Engine Worker could be a thread inside the BPMN engine itself, or it could be an external component. As a best practice, prefer the external task pattern, where the work for the task is performed by a component external to the BPMN engine, over script tasks for I/O or complex processing logic.

NB. All references to a “gateway” shown in the diagrams below denote some sort of interface (e.g., REST API, API GW, gRPC API, microservice, etc.) that sits in front of the components.

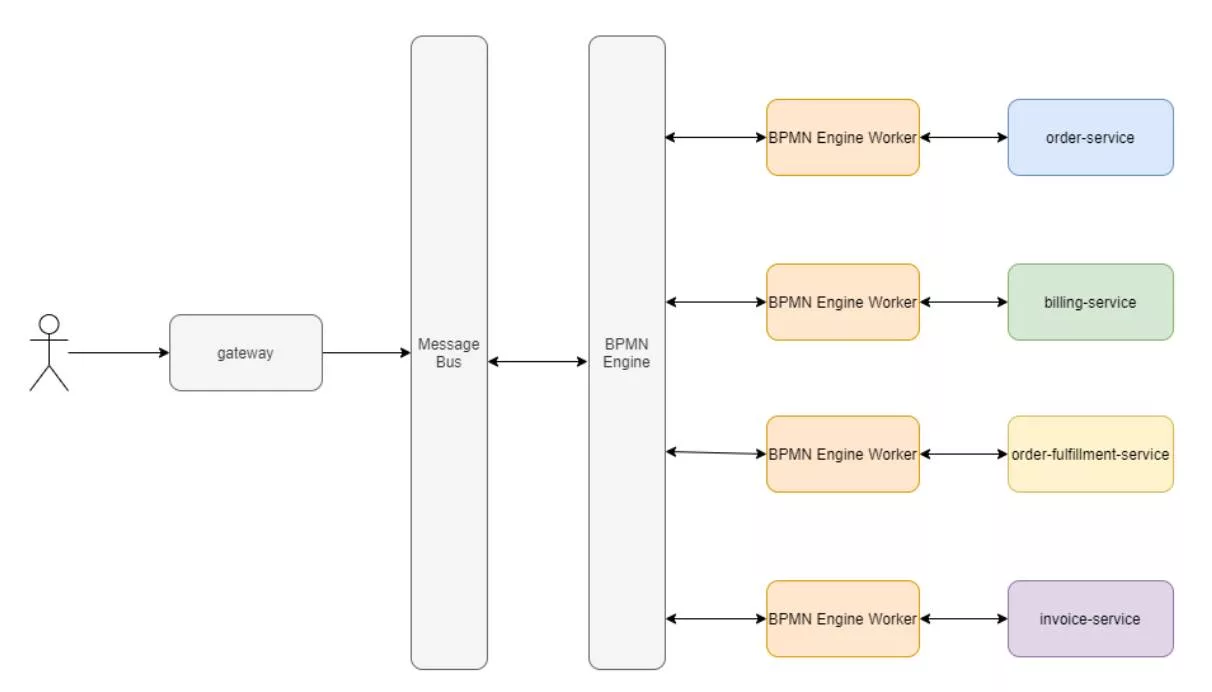

Microservice Orchestration with the BPMN Engine as the Message Bus

In the most basic example, a gateway-like service sits in front of the BPMN engine, and the engine essentially serves as the message bus. This is possible with Camunda, which has an internal message bus and allows for subscriptions to topics that correspond to tasks. The ‘workflow gateway’ depicted is a logical component that communicates with the engine. Camunda ships with a REST API, so it has this capability built in, and the two logical components are the same deployed component.

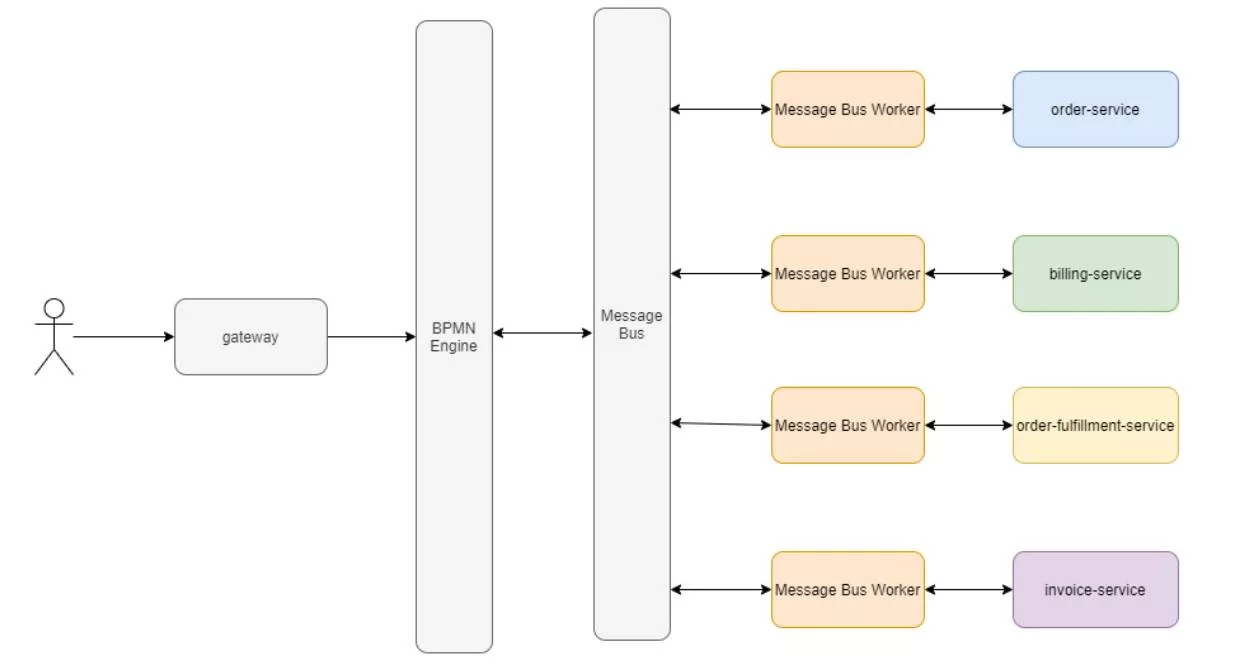

Microservice Orchestration with Dedicated Message Bus

Similar to the pattern where the engine is also used as a message bus, this pattern separates the message bus from the engine itself. In this case, the engine’s workflows are triggered by a message bus consumer responsible for invoking workflows:

This pattern can also be inverted, where the engine communicates with the message bus and the message bus consumers operate against the microservices:

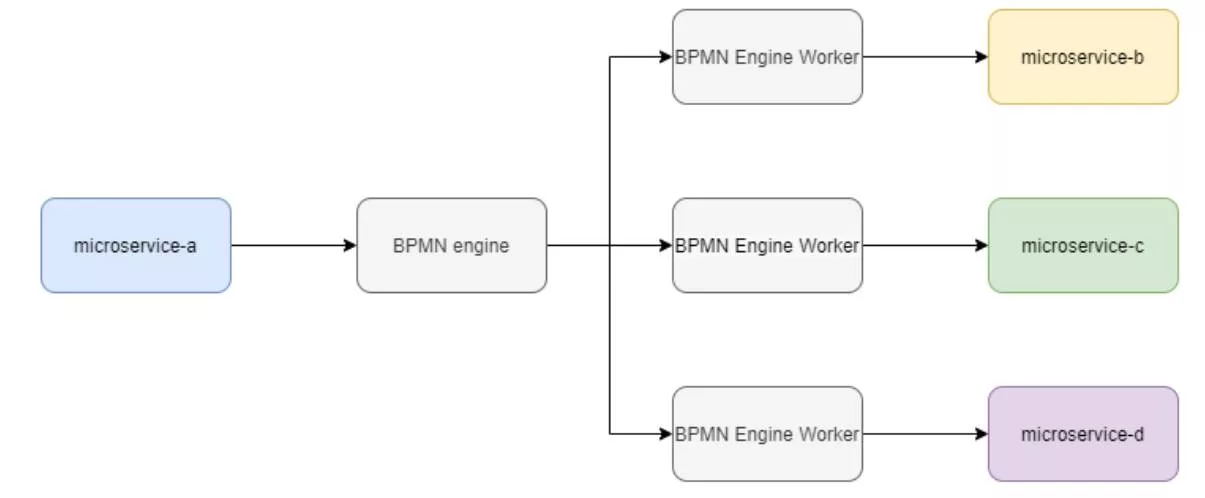
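For the first variant of this pattern, where a message bus consumer invokes workflows, the consumer's main job is to translate a bus message into a request that starts a process instance. The sketch below builds such a request for the Camunda 7 REST API's "start process instance by key" endpoint. It is an illustration under assumptions: `IncomingMessage`, `StartProcessRequest`, and `buildStartProcessRequest` are hypothetical names, using the correlation ID as the business key is one possible choice, and the `ProcessTaskResults` variable anticipates the DTO described later in this article.

```typescript
// Hypothetical shape of a message taken off the bus.
interface IncomingMessage {
  correlationId: string;
  payload: Record<string, unknown>;
}

interface StartProcessRequest {
  url: string;
  method: 'POST';
  headers: Record<string, string>;
  body: string;
}

// Build (but do not send) the HTTP request that starts a workflow.
function buildStartProcessRequest(
  camundaRestUrl: string,
  processKey: string,
  message: IncomingMessage
): StartProcessRequest {
  const dto = {
    correlationId: message.correlationId,
    processMetadata: message.payload,
    taskResults: [],
  };
  return {
    url: `${camundaRestUrl}/process-definition/key/${encodeURIComponent(processKey)}/start`,
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({
      // Assumption: reuse the correlation ID as the business key.
      businessKey: message.correlationId,
      variables: {
        // JSON variables are sent as a serialized string plus a type tag.
        ProcessTaskResults: { value: JSON.stringify(dto), type: 'Json' },
      },
    }),
  };
}
```

Keeping the request construction separate from the HTTP call makes the consumer easy to test; the returned object can be handed to whichever HTTP client the consumer already uses.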

Engine/Workflow Instance per Microservice

In this scenario, each microservice ships with access to an engine/workflow instance that is specific to the microservice. This could be achieved by embedding the BPMN engine into the service itself, or by shipping the engine as part of the logical microservice but running it in a separate process (think two containers for the service instead of one). Alternatively, the main engine instance can host workflows dedicated to individual microservices, as in this example Camunda implementation:

Which One is Best?

All of them. None of them. It is entirely dependent on the overall system architecture, goals, team composition, skill sets, etc. These are patterns and should be applied in the same manner as all other design patterns, i.e., as a way to handle a cross-cutting concern or solve a particular problem.

The number of workers and microservices also needs to be considered. A disciplined team with strong DevOps skills will be better suited to handling the operational overhead of more complex patterns that involve more components and more frequent deployments. In most cases, a single process engine is sufficient, even with large-scale enterprise projects.

Worker Pattern Implementations

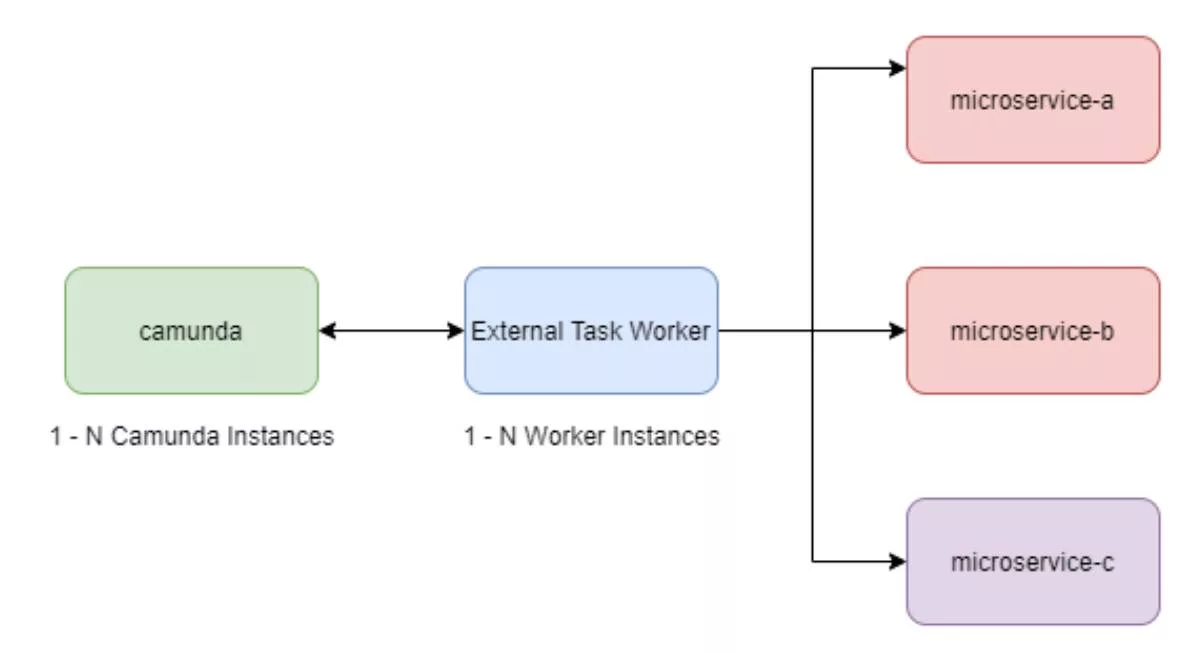

Since we are actively using Camunda, we will use examples from Camunda to keep any code examples or implementation details relevant to the team. The source code for the Camunda NodeJS Client can be found here.

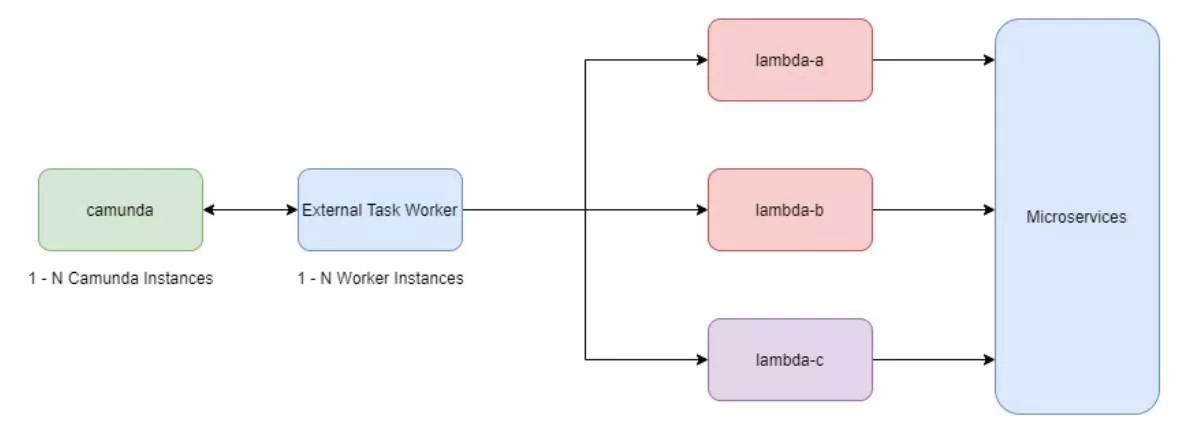

The key highlights of this pattern are:

- Camunda is horizontally scalable

- The external task workers are horizontally scalable

- The microservices are horizontally scalable

With appropriate auto-scaling configurations, the system will be able to handle back pressure effectively and scale up and down as needed. If needed, the various patterns below can be used in combination.

Worker DTO

The best practice when using the External Task pattern is to define a DTO as a variable that enables dynamic data to be passed between the engine and the workers. It is also best to mutate as few variables as possible during processing and store references to the domain objects, rather than their actual data.

Since external tasks can be invoked asynchronously and in parallel during process execution, it is important to design the processes to handle these conditions. The workers should be unaware of other workers, so if a process can be branched and a series of actions are taken in parallel, the process will need to handle updating variables that may mutate based on the execution of a task.

Here is the task interface from the camunda-external-task-client-js:

```typescript
export interface Task {
  variables: Variables;

  // These are not guaranteed by package documentation, but are returned according to REST API docs
  activityId?: string;
  activityInstanceId?: string;
  businessKey?: string;
  errorDetails?: string;
  errorMessage?: string;
  executionId?: string;
  id?: string;
  lockExpirationTime?: string;
  priority?: number;
  processDefinitionId?: string;
  processDefinitionKey?: string;
  processInstanceId?: string;
  retries?: number;
  tenantId?: string;
  topicName?: string;
  workerId?: string;
}
```

To allow for data to be marshaled between the process instance, the task worker, and the services that the tasks are orchestrating, a variable is created that will store the DTO.

Example DTO in TypeScript:

```typescript
export interface TaskResult {
  data: any;
  startTime: number;
  endTime: number;
  taskId: string;
}

export interface ProcessTaskResults {
  // Created by an upstream producer when a message is first dropped onto a message bus.
  correlationId: string;
  // Should contain pointers to any domain objects and any other data that could be important to a task.
  processMetadata: any;
  // Append-only array of results. The process will know how to handle the results
  // and can make decisions based off data returned by the task.
  taskResults: Array<TaskResult>;
}
```

The DTO is serialized into a process instance variable. As tasks are executed, new results are pushed to the taskResults array. The process then uses the data from the external task invocation to move forward in the process. The advantages of this approach include:

- Script tasks are only necessary for very small pieces of logic that are better suited to running in the process itself. One example is a process that branches into parallel invocations of external tasks and needs a map-reduce at the end of the parallel stages to combine the task results. Another is basic logic that is easier to perform with a minor script, such as checking a value in the task results and making a decision based on the value.

- Service outages or bugs in microservices or worker code that cause failures can be fixed without changing and redeploying the process itself. If a service fails or returns a particular error code, the worker reports a task failure or a BPMN error. Task failures that exhaust their retries raise incidents, which can be used to retrigger the failed step in bulk once a service’s health is restored.
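The append-only handling of `taskResults` and the map-reduce step after parallel branches can be sketched as two small pure functions. This is a sketch under assumptions rather than the project's actual helpers: `appendTaskResult` and `mergeBranchResults` are hypothetical names, the interfaces are repeated (with `unknown` in place of `any`) so the block is self-contained, and de-duplicating by `taskId` and ordering by `endTime` is one possible merge policy.

```typescript
interface TaskResult {
  data: unknown;
  startTime: number;
  endTime: number;
  taskId: string;
}

interface ProcessTaskResults {
  correlationId: string;
  processMetadata: unknown;
  taskResults: TaskResult[];
}

// Return a new DTO with the result appended; the input is never mutated.
function appendTaskResult(
  dto: ProcessTaskResults,
  result: TaskResult
): ProcessTaskResults {
  return { ...dto, taskResults: [...dto.taskResults, result] };
}

// Combine the DTO copies produced by parallel branches into one DTO.
// Results are de-duplicated by taskId and ordered by completion time.
function mergeBranchResults(
  branches: ProcessTaskResults[]
): ProcessTaskResults {
  const first = branches[0];
  if (first === undefined) {
    throw new Error('at least one branch is required');
  }
  const merged: TaskResult[] = [];
  for (const branch of branches) {
    for (const result of branch.taskResults) {
      if (!merged.some((existing) => existing.taskId === result.taskId)) {
        merged.push(result);
      }
    }
  }
  merged.sort((a, b) => a.endTime - b.endTime);
  return { ...first, taskResults: merged };
}
```

Treating the DTO as immutable keeps workers unaware of each other: each branch only ever adds its own result, and the merge step is the single place where results from different branches meet.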

Generic External Worker

This is the simplest way to perform microservice orchestration:

```typescript
import {
  Client,
  Handler,
  HandlerArgs,
  logger,
} from 'camunda-external-task-client-js';

import * as dotenv from 'dotenv';
import path from 'path';
import { createWorkerConfiguration } from './factories';

import { makeApiCall, createTaskResult, setTaskResult } from './utils';

export const callApiHandler: Handler = async ({
  task,
  taskService,
}: HandlerArgs) => {
  try {
    // JSON variables are deserialized into plain objects, so read the field directly.
    const processMetadata = task.variables.get('ProcessTaskResults').processMetadata;

    const apiCallResult = await makeApiCall(processMetadata);
    const taskResult = createTaskResult(apiCallResult);
    // Assumption: setTaskResult appends the result to the DTO and returns the
    // Variables instance that should be written back to the process instance.
    const processVariables = setTaskResult(task, taskResult);

    await taskService.complete(task, processVariables);
    console.log('I completed my task successfully!!');
  } catch (e) {
    await taskService.handleFailure(task, {
      errorMessage: 'Task worker failure',
      errorDetails: e instanceof Error ? (e.stack ?? e.message) : String(e),
      // Decrement so that an incident is raised at 0; the initial budget of 3 is an assumption.
      retries: Math.max((task.retries ?? 3) - 1, 0),
      retryTimeout: 1000,
    });
    console.error(`Failed completing my task, ${e}`);
  }
};

dotenv.config({ path: path.join(__dirname, '.env') });

const workerConfig = createWorkerConfiguration(process);

// TODO: define config for actual project
const camundaClientConfig = {
  baseUrl: workerConfig.camundaRestUrl,
  use: logger,
};

// Create a Client instance with custom configuration
const client = new Client(camundaClientConfig);

// Subscribe to the topic 'apiCall'; the client passes { task, taskService } to the handler
client.subscribe('apiCall', callApiHandler);
```

This worker simply passes data between Camunda and microservices. For this pattern to work, the microservices must share a standardized, generic DTO interface. If the services do not have a uniform generic DTO, then other patterns can be used.

The advantage of this worker is that a simple iteration over a map of topics, combined with a generic API call handler, allows the client subscriptions to be configured externally, so new topics and endpoints can be mapped dynamically. This worker creates a homogeneous orchestration layer and requires up-front design to be implemented effectively. In most cases, this pattern is used on greenfield projects where enough up-front design has been completed to allow for a DTO contract across services.
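The iteration over a map of topics mentioned above might look like the following sketch. To keep it self-contained, it uses local stand-in types (`TaskLike`, `TaskServiceLike`, `HandlerLike`, `SubscribableClient`) instead of the real client library; with camunda-external-task-client-js, the client would be its `Client` and the value passed to `complete` would be a `Variables` instance. `ApiCall`, `createGenericHandler`, and `subscribeAll` are hypothetical names.

```typescript
// Local stand-ins for the client library's types (assumptions for this sketch).
interface TaskLike {
  id?: string;
  variables: { get(name: string): any };
}
interface TaskServiceLike {
  complete(task: TaskLike, variables: Record<string, unknown>): Promise<void>;
  handleFailure(task: TaskLike, failure: { errorMessage: string }): Promise<void>;
}
type HandlerLike = (args: {
  task: TaskLike;
  taskService: TaskServiceLike;
}) => Promise<void>;
interface SubscribableClient {
  subscribe(topic: string, handler: HandlerLike): void;
}

// Hypothetical transport: sends the payload to an endpoint and resolves with the response.
type ApiCall = (endpoint: string, payload: unknown) => Promise<unknown>;

// Build one generic handler per endpoint; it knows nothing about the domain.
function createGenericHandler(endpoint: string, callApi: ApiCall): HandlerLike {
  return async ({ task, taskService }) => {
    const dto = task.variables.get('ProcessTaskResults');
    const startTime = Date.now();
    try {
      const data = await callApi(endpoint, dto.processMetadata);
      const result = {
        data,
        startTime,
        endTime: Date.now(),
        taskId: task.id ?? 'unknown',
      };
      // Write back a copy of the DTO with the new result appended.
      await taskService.complete(task, {
        ProcessTaskResults: { ...dto, taskResults: [...dto.taskResults, result] },
      });
    } catch (error) {
      await taskService.handleFailure(task, { errorMessage: String(error) });
    }
  };
}

// topicEndpoints is supplied externally, e.g. from a config file or environment.
function subscribeAll(
  client: SubscribableClient,
  topicEndpoints: Record<string, string>,
  callApi: ApiCall
): void {
  for (const topic of Object.keys(topicEndpoints)) {
    client.subscribe(topic, createGenericHandler(topicEndpoints[topic], callApi));
  }
}
```

Adding a new orchestrated step then only requires a new entry in the topic map, provided the target service honors the shared DTO contract.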

Lambda External Worker

This pattern works similarly to the Generic External Worker in that the External Task Worker is responsible for mapping topics to Lambdas and marshaling data between the Lambda invocations and process instances. This pattern adds a layer of abstraction and scalability; however, it also adds complexity. It can be useful in scenarios where Lambdas interact with third-party systems or AWS services, since the Lambdas can be reused elsewhere in the stack and can abstract away the implementation details of interacting with third-party services.
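The topic-to-Lambda mapping and the marshaling step can be sketched as follows. The invocation itself is injected as a function so the sketch does not depend on a particular SDK; in a real worker it could wrap the AWS SDK's Lambda invoke call. `InvokeLambda`, `topicToFunction`, `invokeForTopic`, and the function names in the map are all hypothetical.

```typescript
// Hypothetical invoker: resolves with the Lambda's response payload as a JSON string.
type InvokeLambda = (functionName: string, payloadJson: string) => Promise<string>;

// Hypothetical mapping of external task topics to Lambda function names.
const topicToFunction: Record<string, string> = {
  sendEmail: 'notifications-send-email',
  resizeImage: 'media-resize-image',
};

// Marshal process metadata into the Lambda payload and parse the response.
async function invokeForTopic(
  topic: string,
  processMetadata: unknown,
  invoke: InvokeLambda,
  mapping: Record<string, string> = topicToFunction
): Promise<unknown> {
  const functionName = mapping[topic];
  if (functionName === undefined) {
    throw new Error(`No Lambda mapped for topic "${topic}"`);
  }
  const responseJson = await invoke(functionName, JSON.stringify(processMetadata));
  return JSON.parse(responseJson);
}
```

The parsed response would then be wrapped in a task result and written back to the process instance in the same way as in the generic worker.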

Domain-Rich External Worker

This pattern is structurally similar to the Generic External Worker. However, this worker performs all fine-grained orchestration, understands various domain objects, and has dependencies on multiple microservices. For this pattern, it is best to separate the workers so that each worker handles a limited number of topics.

This pattern makes the interface between Camunda and the microservices coarser-grained than the Generic External Worker since this worker will understand significantly more about the infrastructure and microservice architecture. The Generic External Worker is effectively a way to wrap an API call to a microservice and requires a standardized DTO that all microservices understand.

If the implementation of a standardized DTO is not possible, this model allows the interface between Camunda and the workers to remain generic. However, the worker must understand what to do with the data rather than simply mapping topics in a one-to-one relationship with API calls. This pattern can allow for simpler BPMN and lets implementations absorb technical hurdles, such as legacy system interoperability that forces heterogeneous worker implementations.
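To illustrate the difference in granularity, the sketch below shows the core of a domain-rich worker for a single coarse-grained topic. From the engine's point of view there is one step; inside the worker there are several service calls with domain knowledge. Everything here is hypothetical: the `InventoryService` and `PaymentService` interfaces, the `OrderMetadata` shape, and the `placeOrder` function are invented for illustration and do not describe any particular system.

```typescript
// Hypothetical service clients that the worker depends on directly.
interface InventoryService {
  reserve(sku: string, quantity: number): Promise<{ reservationId: string }>;
}
interface PaymentService {
  charge(customerId: string, amountCents: number): Promise<{ paymentId: string }>;
}

// Hypothetical domain data carried in the DTO's processMetadata.
interface OrderMetadata {
  customerId: string;
  sku: string;
  quantity: number;
  unitPriceCents: number;
}

interface OrderResult {
  reservationId: string;
  paymentId: string;
  totalCents: number;
}

// One coarse-grained step for the engine, several fine-grained calls in the worker.
async function placeOrder(
  order: OrderMetadata,
  inventory: InventoryService,
  payments: PaymentService
): Promise<OrderResult> {
  const { reservationId } = await inventory.reserve(order.sku, order.quantity);
  const totalCents = order.quantity * order.unitPriceCents;
  const { paymentId } = await payments.charge(order.customerId, totalCents);
  return { reservationId, paymentId, totalCents };
}
```

The external task handler for the topic would read `OrderMetadata` from the process variable, call `placeOrder`, and store the returned `OrderResult` as the task result, so the BPMN diagram needs only a single task for the whole sequence.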